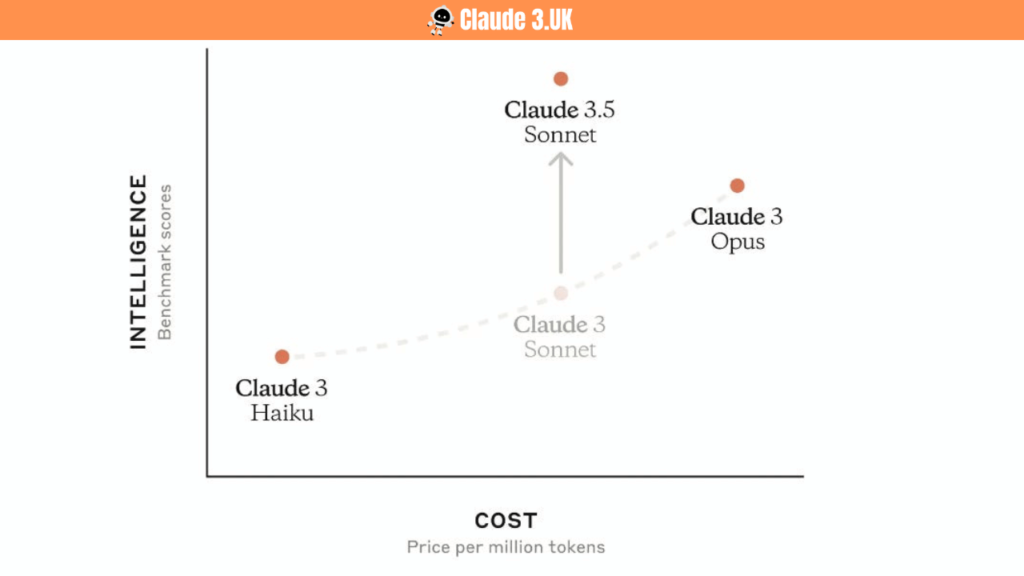

Anthropic’s Research on Corrigibility (ARC) is a crucial component of the ethical and safety features implemented in Claude 3.5 Sonnet, the model Anthropic released in June 2024. ARC represents a significant step toward AI systems that are not only powerful but also able to be aligned with human values and intentions.

This article examines how ARC works within Claude 3.5 Sonnet, covering its principles, its implementation, and its implications for the future of AI development.

Understanding ARC

Definition and Core Principles

ARC, or Anthropic’s Research on Corrigibility, is a framework designed to make AI systems more corrigible, that is, more amenable to correction and oversight by humans. The core principles of ARC include:

- Transparency: Ensuring the AI’s decision-making process is understandable to human overseers.

- Interruptibility: Allowing for safe and effective interruption of the AI’s processes when necessary.

- Value Learning: Enabling the AI to learn and adapt to human values over time.

- Scalable Oversight: Maintaining human control even as the AI’s capabilities grow.

Historical Context

ARC builds upon years of research in AI alignment and safety. It draws inspiration from various concepts in the field, including:

- Cooperative inverse reinforcement learning

- Iterated amplification

- Debate and recursive reward modeling

Implementation in Claude 3.5 Sonnet

Architectural Integration

In Claude 3.5 Sonnet, ARC is not a separate module but an integral part of the model’s architecture. It influences various aspects of the model’s functioning:

- Attention Mechanisms: Modified to allow for human-directed focus

- Output Generation: Includes checkpoints for potential human intervention

- Learning Processes: Incorporate value learning from human feedback

Transparency Layers

Explainable AI Components

Claude 3.5 Sonnet incorporates explainable AI (XAI) techniques to make its decision-making processes more transparent:

- Attention Visualization: Graphical representations of what the model is focusing on

- Natural Language Explanations: The model can provide human-readable explanations for its outputs

- Decision Trees: For certain tasks, the model can present its reasoning in a tree-like structure
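Attention visualization typically starts from the weights an attention layer assigns to each input token. As a minimal sketch (a generic illustration of the technique, not code from Claude 3.5 Sonnet), the Python below computes scaled dot-product attention weights for a single query over a few toy key vectors and renders them as a plain-text bar chart. The tokens, vectors, and helper names are hypothetical.

```python
import math

def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d))."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def render(tokens, weights, width=20):
    """Plain-text 'heatmap': one bar per token, length proportional to its weight."""
    return "\n".join(
        f"{tok:>8} {'#' * round(w * width):<{width}} {w:.2f}"
        for tok, w in zip(tokens, weights)
    )

# Hypothetical 2-d key vectors; real models use many heads and far larger dimensions.
tokens = ["the", "patient", "reported", "pain"]
keys = [[0.1, 0.0], [1.0, 0.5], [0.2, 0.9], [0.9, 1.0]]
query = [1.0, 1.0]
weights = attention_weights(query, keys)
print(render(tokens, weights))
```

Visualization tools render the same per-token weights as heatmaps across layers and heads; the text bars here only make the idea visible without a plotting library.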

Interpretability Modules

To further enhance transparency, Claude 3.5 Sonnet includes specialized interpretability modules:

- Feature Attribution: Identifying which input features most influenced a particular output

- Counterfactual Explanations: Showing how changes in input would affect the output

- Concept Activation Vectors: Revealing high-level concepts the model is using in its reasoning
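Occlusion-based feature attribution and counterfactual search are model-agnostic, so they can be shown on any scoring function. The sketch below assumes a hypothetical linear toy scorer in place of a real model: occlusion attribution replaces one feature at a time with a baseline and records how much the score changes, and a deliberately simple greedy search nudges the most influential feature until the decision flips. It illustrates the two techniques; it is not Claude 3.5 Sonnet’s interpretability tooling.

```python
from typing import Callable, List, Optional, Tuple

Model = Callable[[List[float]], float]

def occlusion_attribution(f: Model, x: List[float], baseline: float = 0.0) -> List[float]:
    """Attribution of feature i = f(x) - f(x with feature i replaced by a baseline)."""
    full = f(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        attributions.append(full - f(occluded))
    return attributions

def greedy_counterfactual(f: Model, x: List[float], threshold: float = 0.0,
                          step: float = 0.1, max_iters: int = 1000
                          ) -> Tuple[int, Optional[List[float]]]:
    """Nudge the most influential feature until f crosses the threshold.
    Returns (feature index, counterfactual input), or (index, None) if no flip."""
    original_side = f(x) > threshold
    attributions = occlusion_attribution(f, x)
    i = max(range(len(x)), key=lambda j: abs(attributions[j]))
    # A finite-difference slope tells us which direction moves f toward the threshold.
    probe = list(x)
    probe[i] += 1e-6
    slope = f(probe) - f(x)
    direction = -1.0 if (slope > 0) == original_side else 1.0
    cf = list(x)
    for _ in range(max_iters):
        cf[i] += direction * step
        if (f(cf) > threshold) != original_side:
            return i, cf
    return i, None

# Hypothetical toy scorer standing in for a model: positive score = "approve".
toy_weights, toy_bias = [2.0, -1.0, 0.5], -1.0
def toy_model(v: List[float]) -> float:
    return sum(w * a for w, a in zip(toy_weights, v)) + toy_bias

x = [1.0, 0.5, 1.0]
print(occlusion_attribution(toy_model, x))  # [2.0, -0.5, 0.5]
idx, cf = greedy_counterfactual(toy_model, x)
print(idx, cf)  # feature 0 is lowered until the score drops to zero or below
```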

Interruptibility Mechanisms

Safe Interruption Protocols

Claude 3.5 Sonnet is designed with multiple layers of safe interruption:

- Soft Interrupts: Allowing for graceful pausing and resumption of tasks

- Hard Interrupts: Immediate cessation of all processes when necessary

- Gradual Slowdown: Option to progressively reduce the model’s processing speed for careful oversight
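The three interruption modes can be sketched as a cooperative control loop. In this minimal Python example, assuming a task that can be split into discrete steps, a hypothetical `InterruptController` is checked between steps: `PAUSE` returns the saved progress so the task can resume later, `STOP` abandons it, and `slow_down` inserts a growing delay between steps. Both interrupts here take effect at step boundaries; a truly immediate hard stop would require terminating the worker process itself.

```python
import time
from enum import Enum

class Signal(Enum):
    RUN = "run"
    PAUSE = "pause"  # soft interrupt: stop at the next step boundary, keep progress
    STOP = "stop"    # hard interrupt: abandon the task at the next step boundary

class InterruptController:
    """Hypothetical handle an overseer uses to steer a step-wise task."""
    def __init__(self):
        self.signal = Signal.RUN
        self.delay = 0.0  # seconds inserted between steps

    def slow_down(self, extra_seconds: float):
        """Gradual slowdown: each call lengthens the pause between steps."""
        self.delay += extra_seconds

def run_task(steps, controller, state=None, sleep=time.sleep):
    """Run callables in order, checking the controller before each step.
    Returns (status, state); pass the same state back in to resume a paused task."""
    state = state if state is not None else {"next": 0, "results": []}
    while state["next"] < len(steps):
        if controller.signal is Signal.STOP:
            return "stopped", state
        if controller.signal is Signal.PAUSE:
            return "paused", state
        state["results"].append(steps[state["next"]]())
        state["next"] += 1
        if controller.delay > 0:
            sleep(controller.delay)
    return "done", state

# Usage: the second step simulates an overseer pausing the task mid-run.
ctl = InterruptController()
def make_step(n):
    def step():
        if n == 1:
            ctl.signal = Signal.PAUSE
        return n * 10
    return step

steps = [make_step(i) for i in range(4)]
status1, state = run_task(steps, ctl)         # "paused" with results [0, 10]
ctl.signal = Signal.RUN
status2, state = run_task(steps, ctl, state)  # resumes: "done", results [0, 10, 20, 30]
```

Passing `sleep` as a parameter keeps the slowdown testable: a caller can substitute a function that records delays instead of actually waiting.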

Checkpoint Systems

The model incorporates checkpoint systems at various stages of its operation:

- Regular Checkpoints: Predefined points where the model can safely pause for human review

- Dynamic Checkpoints: Automatically generated pause points based on task complexity or potential ethical concerns

- User-Defined Checkpoints: Allowing human operators to set custom review points
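One way to combine the three checkpoint types is a single policy consulted before each step. The sketch below assumes a hypothetical per-step risk score supplied by the caller, standing in for whatever signal drives dynamic checkpoints; the class name, thresholds, and scores are illustrative only.

```python
from dataclasses import dataclass, field
from typing import List, Set

@dataclass
class CheckpointPolicy:
    """Hypothetical policy that decides whether to pause for human review at a step."""
    every_n: int = 5              # regular checkpoints: every n-th step
    risk_threshold: float = 0.7   # dynamic checkpoints: pause when risk exceeds this
    user_steps: Set[int] = field(default_factory=set)  # user-defined checkpoints

    def reasons(self, step: int, risk: float) -> List[str]:
        """Return the reasons to pause at this step (empty list = keep going)."""
        why = []
        if self.every_n > 0 and step > 0 and step % self.every_n == 0:
            why.append("regular")
        if risk > self.risk_threshold:
            why.append("dynamic")
        if step in self.user_steps:
            why.append("user-defined")
        return why

policy = CheckpointPolicy(every_n=3, risk_threshold=0.8, user_steps={4})
step_risks = [0.1, 0.2, 0.9, 0.3, 0.2, 0.1, 0.5]  # hypothetical per-step risk scores
for step, risk in enumerate(step_risks):
    why = policy.reasons(step, risk)
    if why:
        print(f"pause before step {step} for review: {', '.join(why)}")
```

Returning the list of reasons, rather than a bare yes/no, lets the reviewer see why the pause happened.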

Value Learning Framework

Inverse Reinforcement Learning

Claude 3.5 Sonnet uses inverse reinforcement learning and related preference-based techniques to infer human values:

- Preference Learning: Deriving value functions from observed human choices

- Reward Modeling: Constructing reward functions that align with human preferences

- Active Learning: Proactively seeking clarification on ambiguous value-related scenarios
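Preference learning and reward modeling are commonly implemented with a Bradley-Terry model, which turns pairwise human choices into a scalar reward. The Python below is a minimal sketch of that common approach, not Anthropic’s training pipeline: it fits a linear reward over hypothetical hand-chosen features from (preferred, rejected) pairs, then applies a simple active-learning rule that asks the human about the unlabeled pair the model is least sure of.

```python
import math
from itertools import combinations

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def reward(w, x):
    """Linear reward r(x) = w . x over hand-chosen features."""
    return sum(wi * xi for wi, xi in zip(w, x))

def fit_reward_model(features, comparisons, lr=0.1, epochs=500, l2=0.01):
    """Bradley-Terry reward model: P(a preferred over b) = sigmoid(r(a) - r(b)).
    Fits w by gradient ascent on the log-likelihood of (winner, loser) pairs,
    with a small L2 penalty so w stays bounded when the data are separable."""
    dim = len(next(iter(features.values())))
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [-l2 * wk for wk in w]
        for winner, loser in comparisons:
            diff = [a - b for a, b in zip(features[winner], features[loser])]
            p = sigmoid(reward(w, diff))  # r is linear, so r(diff) = r(winner) - r(loser)
            for k in range(dim):
                grad[k] += (1.0 - p) * diff[k]
        w = [wk + lr * gk for wk, gk in zip(w, grad)]
    return w

def most_uncertain_pair(features, w, asked):
    """Active learning: pick the unasked pair whose predicted preference is closest to 50/50."""
    candidates = [p for p in combinations(sorted(features), 2) if p not in asked]
    return min(candidates, key=lambda p: abs(
        sigmoid(reward(w, features[p[0]]) - reward(w, features[p[1]])) - 0.5))

# Hypothetical responses scored on two features: [helpfulness, harmfulness].
features = {"A": [1.0, 0.0], "B": [0.5, 0.0], "C": [1.0, 1.0], "D": [0.0, 0.0]}
comparisons = [("A", "B"), ("B", "D"), ("A", "C"), ("D", "C")]  # (preferred, rejected)
w = fit_reward_model(features, comparisons)
asked = {tuple(sorted(pair)) for pair in comparisons}
print("weights:", [round(v, 2) for v in w])
print("ask the human about:", most_uncertain_pair(features, w, asked))
```

Reward models used in practice typically replace the linear function with a neural network that scores the whole response, while keeping a pairwise loss of this form.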

Ethical Training Data

The model is trained on a diverse dataset of ethical scenarios and human judgments:

- Philosophical Thought Experiments: Exposing the model to classic and contemporary ethical dilemmas

- Real-world Case Studies: Training on actual ethical decisions made in various fields

- Cultural Diversity: Incorporating ethical perspectives from different cultures and traditions

Scalable Oversight

Hierarchical Control Structures

To maintain human control as the model’s capabilities grow, Claude 3.5 Sonnet implements hierarchical control structures:

- Task Decomposition: Breaking complex tasks into smaller, more manageable subtasks

- Oversight Levels: Different levels of human involvement based on task criticality

- Escalation Protocols: Mechanisms for elevating decisions to higher levels of human oversight
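Task decomposition, oversight levels, and escalation fit together naturally as a walk over a task tree. The sketch below assumes a hypothetical four-tier oversight scale, an integer criticality per subtask, and a self-reported confidence score: a low-confidence subtask is escalated one tier, and a parent task inherits the strictest tier among its subtasks. Task names must be unique in this simplified version.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical oversight tiers, from least to most human involvement.
LEVELS = ["automatic", "operator review", "expert review", "committee sign-off"]

@dataclass
class Task:
    name: str
    criticality: int            # 0 (routine) to 3 (high stakes); hypothetical scale
    confidence: float = 1.0     # model's self-reported confidence in [0, 1]
    subtasks: List["Task"] = field(default_factory=list)

def required_level(task: Task, min_confidence: float = 0.8) -> int:
    """Base tier comes from criticality; low confidence escalates one tier."""
    level = min(task.criticality, len(LEVELS) - 1)
    if task.confidence < min_confidence:
        level = min(level + 1, len(LEVELS) - 1)
    return level

def plan_oversight(task: Task, min_confidence: float = 0.8) -> Dict[str, int]:
    """Map every task in the tree to a tier; a parent gets at least its strictest subtask's tier."""
    plan: Dict[str, int] = {}
    strictest_child = 0
    for sub in task.subtasks:
        plan.update(plan_oversight(sub, min_confidence))
        strictest_child = max(strictest_child, plan[sub.name])
    plan[task.name] = max(required_level(task, min_confidence), strictest_child)
    return plan

report = Task("draft report", criticality=1, subtasks=[
    Task("summarize sources", criticality=0),
    Task("assess legal risk", criticality=2, confidence=0.6),
])
for name, level in plan_oversight(report).items():
    print(f"{name}: {LEVELS[level]}")
```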

Meta-Learning for Oversight

The model employs meta-learning techniques to improve its ability to be overseen:

- Learning to Be Instructed: Improving the model’s ability to understand and follow human instructions

- Oversight Efficiency: Learning to present information in ways that facilitate effective human oversight

- Anomaly Detection: Identifying situations that may require increased human attention
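Anomaly detection can be as simple as comparing a monitored quantity against its historical baseline. The sketch below uses a z-score test, one of many possible detectors; the monitored metric (here a hypothetical count of tool calls per task) and the threshold of three standard deviations are assumptions for illustration.

```python
import statistics

def flag_anomalies(history, new_values, z_threshold=3.0):
    """Return (index, value, z-score) for new values more than z_threshold
    standard deviations from the historical mean."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 points
    flagged = []
    for i, value in enumerate(new_values):
        z = (value - mean) / stdev if stdev > 0 else 0.0
        if abs(z) > z_threshold:
            flagged.append((i, value, round(z, 2)))
    return flagged

# Hypothetical baseline: tool calls per task observed during normal operation.
history = [4, 5, 6, 5, 4, 5, 6, 5]
print(flag_anomalies(history, [5, 7, 12]))  # only the third value is flagged
```

In an oversight setting, a flag like this could route the task to closer human review rather than block it outright.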

Practical Applications of ARC in Claude 3.5 Sonnet

Decision Support Systems

In decision support applications, ARC enables Claude 3.5 Sonnet to:

- Provide transparent reasoning for its recommendations

- Allow for easy human override of suggestions

- Learn from human decisions to improve future recommendations

Content Generation

For content generation tasks, ARC ensures that:

- Generated content aligns with specified ethical guidelines

- Humans can easily intervene and modify the generation process

- The model learns to adapt its style and content based on feedback

Data Analysis and Research

In data analysis and research applications, ARC facilitates:

- Clear explanations of analytical methods and conclusions

- Easy interruption and modification of analysis processes

- Incorporation of domain expertise and ethical considerations in research methodologies

Challenges and Limitations

Balancing Autonomy and Control

One of the key challenges in implementing ARC is finding the right balance between the model’s autonomy and human control:

- Over-reliance on Human Oversight: May limit the model’s efficiency and scalability

- Insufficient Control: Risks the model making decisions beyond its ethical training

Complexity of Value Alignment

Aligning the model with human values is an ongoing challenge:

- Value Diversity: Dealing with the wide range of human values across cultures and individuals

- Changing Values: Adapting to evolving societal norms and ethical standards

- Edge Cases: Handling scenarios not covered in ethical training data

Technical Limitations

Current technical limitations include:

- Computational Overhead: ARC features can increase the model’s computational requirements

- Latency Issues: Real-time interruption and oversight can introduce delays in processing

- Scalability Concerns: Maintaining effective oversight for increasingly complex tasks

Future Directions

Advanced Value Learning

Future iterations of ARC in Claude models may include:

- Meta-Ethical Reasoning: Enabling the model to engage in higher-level ethical deliberation

- Cooperative Inverse Reinforcement Learning: More sophisticated techniques for inferring human preferences

- Multi-Agent Value Alignment: Navigating scenarios involving multiple stakeholders with potentially conflicting values

Enhanced Interpretability

Ongoing research aims to further improve the model’s interpretability:

- Neuromorphic Explanations: Providing insights into the model’s decision-making process in ways that mirror human cognitive processes

- Interactive Explanations: Allowing users to explore the model’s reasoning through dynamic, interactive interfaces

- Cross-Modal Interpretability: Extending explainability to multimodal inputs and outputs

Adaptive Oversight

Future versions may implement more adaptive oversight mechanisms:

- Contextual Oversight Levels: Automatically adjusting the level of human involvement based on the specific context and potential impact of decisions

- Predictive Interruption: Anticipating scenarios that may require human intervention before they occur

- Oversight Efficiency Optimization: Continuously improving the efficiency of human-AI interaction in oversight processes

Ethical Implications

Responsible AI Development

The implementation of ARC in Claude 3.5 Sonnet represents a commitment to responsible AI development:

- Proactive Approach to AI Safety: Addressing potential risks before they manifest

- Ethical Consideration Integration: Making ethical reasoning an intrinsic part of the AI system

- Human-Centric Design: Prioritizing human values and oversight in AI capabilities

Transparency and Trust

ARC’s focus on transparency can help build public trust in AI systems:

- Demystifying AI: Making AI decision-making processes more understandable to the general public

- Accountability: Providing clear mechanisms for tracing and explaining AI-driven decisions

- Ethical Auditing: Facilitating third-party ethical audits of AI systems

Societal Impact

The widespread adoption of ARC-like systems could have significant societal implications:

- Democratization of AI Oversight: Enabling broader participation in shaping AI behavior

- Ethical AI Literacy: Promoting public understanding of AI ethics and governance

- Human-AI Collaboration Models: Developing new paradigms for effective human-AI teamwork

Conclusion

The implementation of Anthropic’s Research on Corrigibility (ARC) in Claude 3.5 Sonnet represents a significant advancement in the field of AI ethics and safety. By integrating transparency, interruptibility, value learning, and scalable oversight into the core architecture of the model, ARC addresses many of the key concerns surrounding powerful AI systems.

As AI continues to grow in capability and influence, frameworks like ARC will be crucial in ensuring that these systems remain aligned with human values and intentions. The challenges of balancing autonomy with control, navigating the complexities of value alignment, and overcoming technical limitations remain ongoing areas of research and development.

The future directions of ARC, including advanced value learning, enhanced interpretability, and adaptive oversight, could yield more sophisticated and reliable AI systems. These developments may shape not only the technical landscape of AI but also its ethical, social, and philosophical implications.

Ultimately, ARC’s success in Claude 3.5 Sonnet and future AI systems will be measured by whether it produces AI assistants that are not only highly capable but also fundamentally corrigible, transparent, and aligned with human values. As AI capabilities continue to advance, such frameworks will help ensure that artificial intelligence remains a tool for human benefit rather than a source of uncontrolled risk.

FAQs

Q1: What is ARC in Claude 3.5 Sonnet?

A1: ARC stands for Anthropic’s Research on Corrigibility. It’s a framework implemented in Claude 3.5 Sonnet to make the AI system more amenable to correction and oversight by humans.

Q2: What are the core principles of ARC?

A2: The core principles include transparency, interruptibility, value learning, and scalable oversight.

Q3: How does ARC enhance transparency in Claude 3.5 Sonnet?

A3: ARC incorporates explainable AI techniques, attention visualization, and interpretability modules to make the model’s decision-making process more transparent.

Q4: What are the interruptibility mechanisms in ARC?

A4: ARC includes safe interruption protocols and checkpoint systems that allow for pausing, resuming, or stopping the model’s processes when necessary.

Q5: How does ARC implement value learning in Claude 3.5 Sonnet?

A5: It uses inverse reinforcement learning techniques and trains on diverse ethical datasets to align the model with human values.

Q6: What is scalable oversight in ARC?

A6: Scalable oversight involves hierarchical control structures and meta-learning techniques to maintain human control as the AI’s capabilities grow.

Q7: How does ARC affect decision support applications of Claude 3.5 Sonnet?

A7: It allows for transparent reasoning, easy human override, and learning from human decisions to improve future recommendations.